Ollama Gemma3 Model Open WebUI Remote Access Tutorial

Update Date:2025-07-18 13:48:10

Deploying Ollama locally with the open-source vision model Gemma3 and Open WebUI lets you run a powerful multimodal large model offline and interact with it through a convenient graphical interface, balancing privacy, security, and ease of use.

The following is a complete, step-by-step process for deploying Ollama, Gemma3, and Open WebUI locally in a Windows environment. Afterwards, you can use Aweray Aweshell intranet penetration for remote access, with no public IP address or router configuration required.

1. How do you deploy the Gemma3 model with Ollama?

1. Install Ollama

Go to Ollama's official website (ollama.com) to download the installation package, install it with one click, and start Ollama.

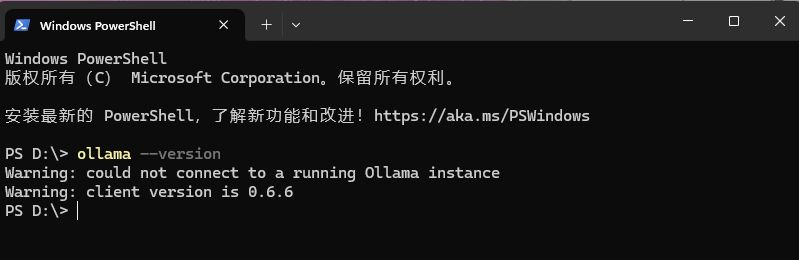

After the installation is complete, open the command line (CMD or PowerShell) to test whether the installation is successful:

'ollama --version'

2. Run the Gemma3 model

Gemma3 is an open vision/language model series released by Google. You can start it directly with the following command. The model is downloaded automatically on the first run; depending on the parameter size you select, this may require anywhere from hundreds of MB to several GB or more of disk space. Once the download finishes, the model is loaded automatically.

'ollama run gemma3'
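
Besides the interactive prompt, Ollama also serves a local HTTP API, which is what Open WebUI connects to later. The following is a minimal Python sketch for checking that the model is installed and sending it one prompt. It assumes Ollama is listening on its default address (http://localhost:11434) and uses the `/api/tags` and `/api/generate` endpoints; the helper names `has_model`, `list_models`, and `ask` are hypothetical and only for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default Ollama address


def has_model(tags: dict, name: str) -> bool:
    """Return True if any installed model name starts with `name`."""
    return any(m.get("name", "").startswith(name) for m in tags.get("models", []))


def list_models(base_url: str = OLLAMA_URL) -> dict:
    """Fetch the installed-model list from GET /api/tags."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)


def ask(prompt: str, model: str = "gemma3", base_url: str = OLLAMA_URL) -> str:
    """Send one non-streaming prompt to POST /api/generate and return the reply text."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

With Ollama running, `has_model(list_models(), "gemma3")` should report whether the download succeeded, and `ask("Say hello in one sentence.")` returns the model's reply as a string.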

2. How do you install Open WebUI with Docker?

1. Install Docker

Go to the Docker official website (docker.com) to download the installation package, install it with one click, and start Docker.

2. Open a command prompt and pull the Open WebUI Docker image

'docker pull ghcr.io/open-webui/open-webui:main'

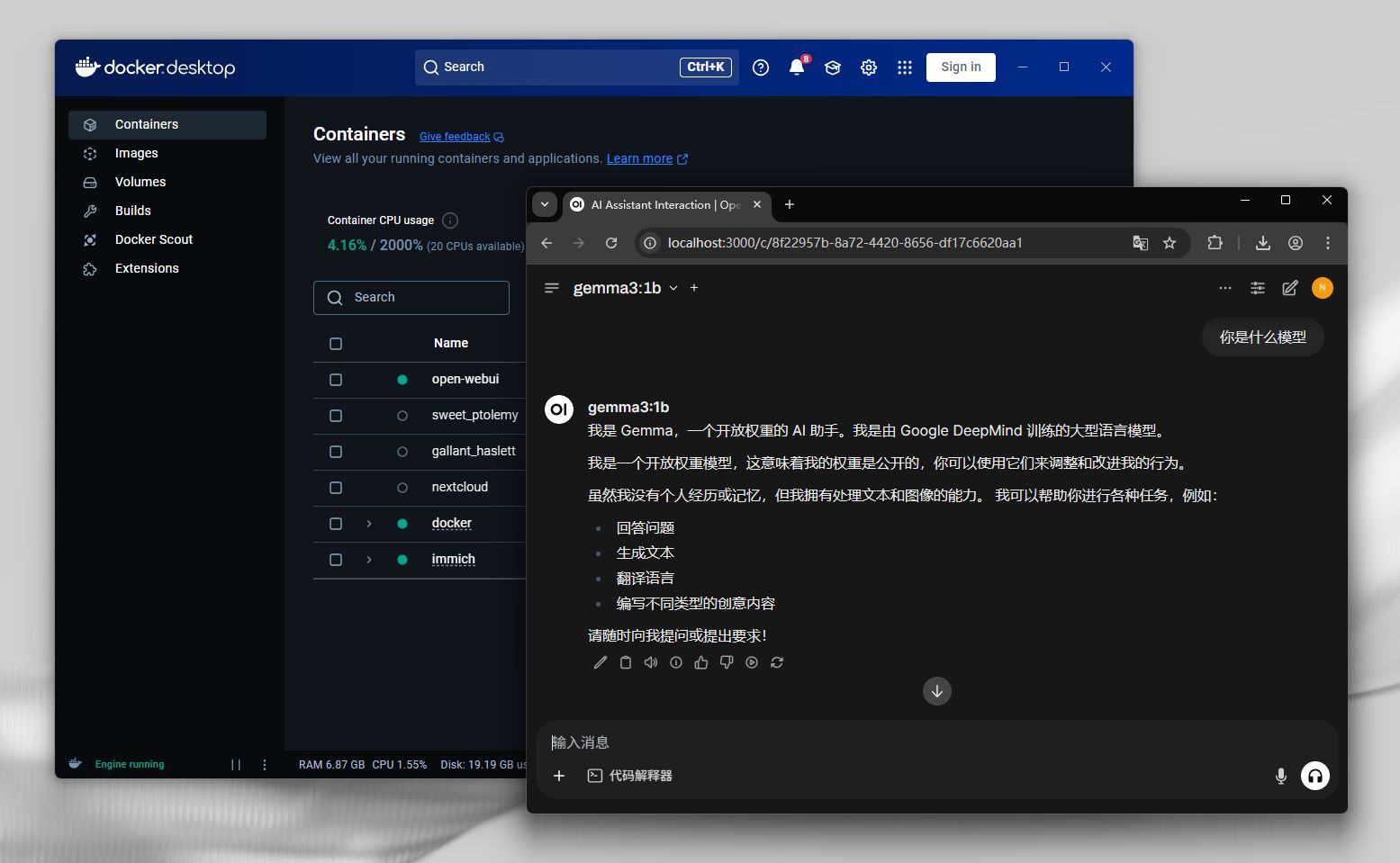

3. Start the container and bind Ollama

'docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=http://host.docker.internal:11434 -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main'

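- -p 3000:8080: maps port 3000 on the host to port 8080 inside the container, so Open WebUI is reachable on local port 3000.

- OLLAMA_BASE_URL: points to the Ollama service address on your host (under Windows, you can use host.docker.internal directly).

- -v open-webui:/app/backend/data: stores Open WebUI data in a named Docker volume so that it survives re-creating the container.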
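
If you prefer to start the container from a script, the same options can be assembled programmatically. This is a minimal Python sketch, assuming Docker is installed and on the PATH; the function names `build_run_args` and `start_open_webui` are hypothetical, and the values mirror the command above.

```python
import subprocess


def build_run_args(
    host_port: int = 3000,
    ollama_url: str = "http://host.docker.internal:11434",
    image: str = "ghcr.io/open-webui/open-webui:main",
    name: str = "open-webui",
) -> list[str]:
    """Assemble the same `docker run` arguments used in this tutorial."""
    return [
        "docker", "run", "-d",
        "-p", f"{host_port}:8080",               # host port -> container port 8080
        "-e", f"OLLAMA_BASE_URL={ollama_url}",   # where Open WebUI looks for Ollama
        "-v", "open-webui:/app/backend/data",    # named volume for persistent data
        "--name", name,
        image,
    ]


def start_open_webui(**kwargs) -> None:
    """Run the container; raises CalledProcessError if Docker reports a failure."""
    subprocess.run(build_run_args(**kwargs), check=True)
```

Passing the arguments as a list avoids shell quoting differences between CMD and PowerShell. For example, `start_open_webui(host_port=8081)` would publish the interface on port 8081 instead of 3000.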

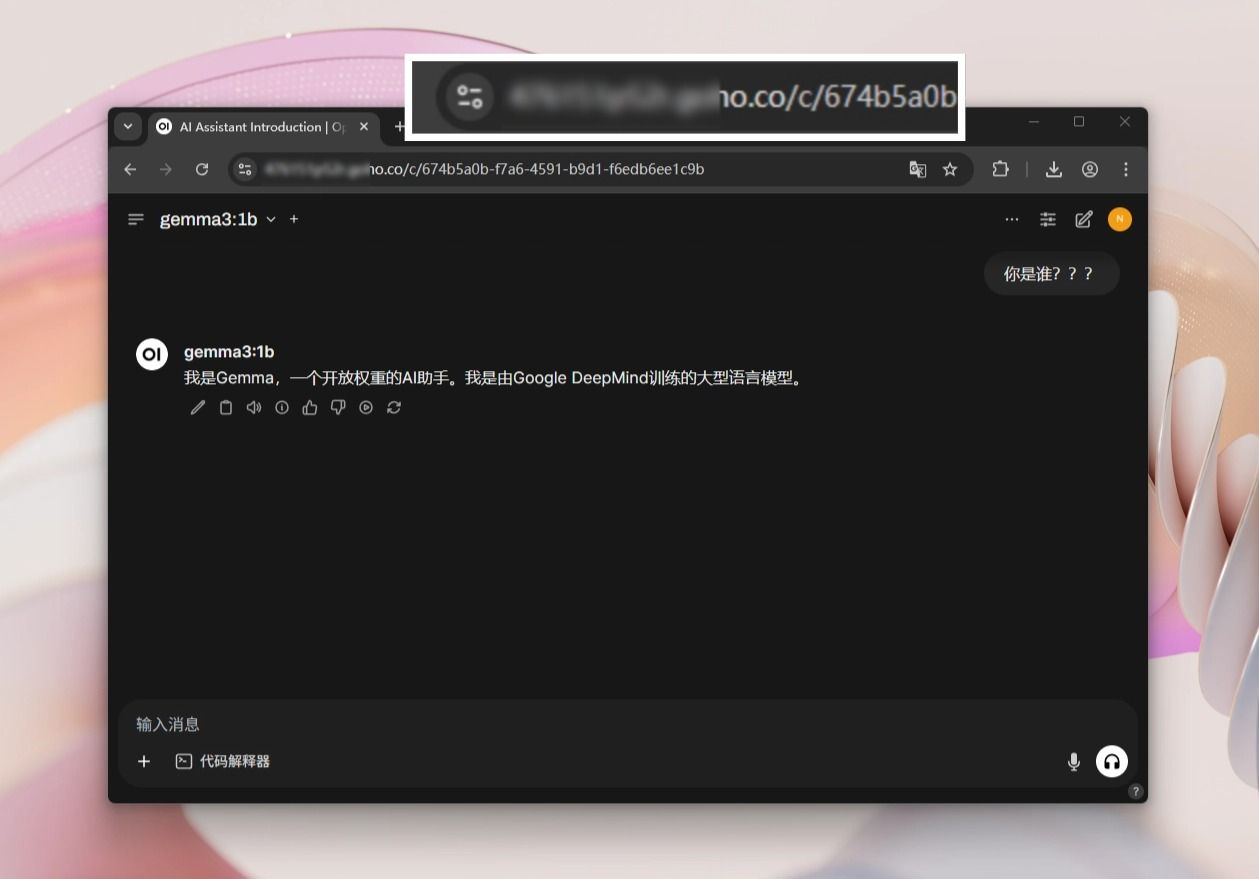

Open the local address (localhost:3000) in a browser. A ChatGPT-like page will appear, Gemma3 should be listed in the model selector on the left, and you can start interacting with the model.
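
The container may need a short while after starting before the page responds. The sketch below polls the local address until it answers; it is an illustration only, assuming the port mapping 3000 from the command above. The names `http_ok` and `wait_until_ready` are hypothetical.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """Return True if the URL answers with an HTTP 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(
    url: str = "http://localhost:3000",
    attempts: int = 30,
    delay: float = 2.0,
    probe: Callable[[str], bool] = http_ok,
) -> bool:
    """Poll `url` until `probe` succeeds or `attempts` runs out."""
    for i in range(attempts):
        if probe(url):
            return True
        if i < attempts - 1:
            time.sleep(delay)  # wait before the next attempt
    return False
```

Calling `wait_until_ready()` returns True as soon as the page responds, or False after all attempts have failed, in which case checking the container logs with 'docker logs open-webui' is a reasonable next step.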

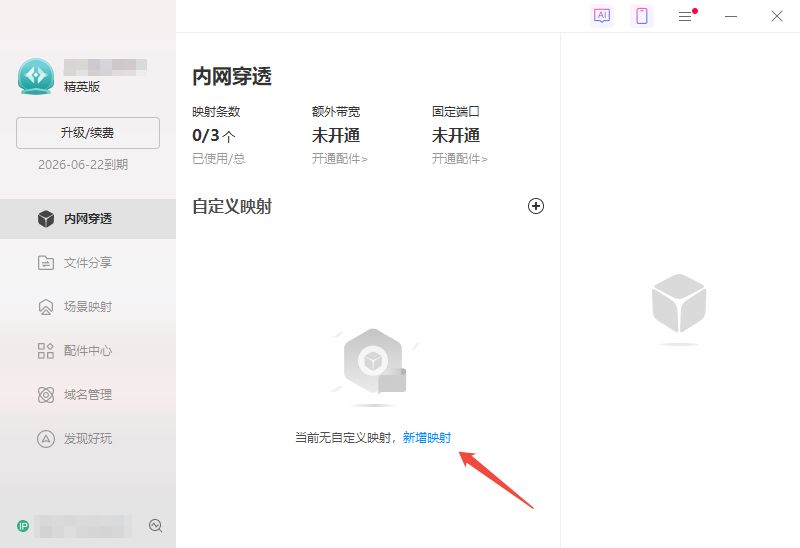

Use Aweshell intranet penetration to map Open WebUI for remote access

- Go to the Aweray Aweshell official website, download and install the client for your system version, then start the Aweshell client and log in.

- Following the prompts in the client interface, open the Aweray Aweshell cloud console, add an HTTPS/HTTP mapping that points to local port 3000, and generate a fixed access link with one click.

- Remote access: use the fixed access link generated by Aweray Aweshell to reach the locally deployed Open WebUI directly.

Security Configuration

For web applications such as Open WebUI, it is recommended to enable Aweray Aweshell password authentication, which adds an extra authentication step to web-type mappings and helps prevent unauthorized access.

Prefer an HTTPS mapping so that traffic is encrypted in transit. Aweray Aweshell supports one-click HTTPS mapping without deploying a certificate locally.

If necessary, you can also enable Aweray Aweshell's fine-grained access control to restrict access by time period, IP address or region, browser or system version, and so on, so that only a specific range of clients is allowed.
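
As a small sanity check before sharing a link, you can confirm that it really uses HTTPS. This is a minimal Python sketch; the format of the link generated by Aweshell is not specified here, so the example URLs are placeholders and the helper name `is_https_link` is hypothetical.

```python
from urllib.parse import urlparse


def is_https_link(link: str) -> bool:
    """Return True if the link has an https scheme and a host name."""
    parts = urlparse(link)
    return parts.scheme == "https" and bool(parts.netloc)
```

For example, `is_https_link("https://example.com/")` is True, while a link that starts with http:// is rejected.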

At this point, a complete local AI application stack based on Ollama, Gemma3, and Open WebUI is in place, covering model deployment, the graphical interface, and remote access. Aweray Aweshell intranet penetration removes the dependence on a public IP address and on a particular network environment, and gives your multimodal model application secure, controllable remote access from anywhere. The combination is suited to personal experimentation, LAN deployment, and in-house model debugging.
